🗓️ This Week In AI Research (14-20 December 2025)

The top 10 AI research papers that you must know about this week.

1. NVIDIA Nemotron 3

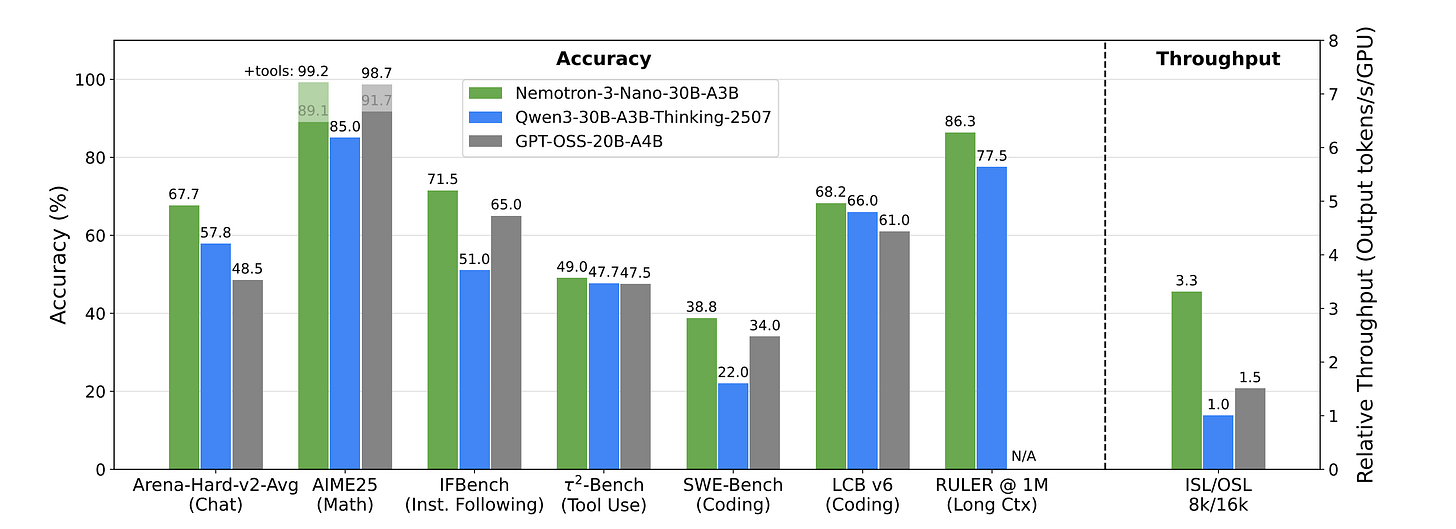

NVIDIA has launched the Nemotron 3 family of open AI models in three sizes (Nano, Super, and Ultra). These models are designed to build efficient multi-agent AI systems.

Nemotron 3 Nano uses a hybrid Mamba-Transformer mixture-of-experts (MoE) architecture that delivers up to 4× higher throughput than its predecessor and reduces inference costs by up to 60%.

It achieves up to 3.3× higher inference throughput than similarly sized open models like GPT-OSS-20B and Qwen3-30B-A3B-Thinking-2507, while also being more accurate on popular benchmarks.
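To give a feel for why an MoE layer can be cheap at inference time, here is a minimal sketch of generic top-k expert routing. It is not Nemotron 3's actual router: the function names (`route_top_k`, `moe_forward`), the toy experts, and the choice of k are all assumptions made for illustration. The point is only that each token runs through a few selected experts rather than all of them.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are the router probabilities renormalized over the selected experts.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the k selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Four toy "experts" (hypothetical); only two of them run for this token.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 2.0]
selected = route_top_k(logits, k=2)
output = moe_forward(3.0, experts, logits, k=2)
```

With four experts and k=2, half of the expert computation is skipped for each token, which is the general mechanism behind the throughput gains that MoE designs aim for.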

The model comes with a 1-million-token context window and supports deep multi-document reasoning and long-running agent memory.

The Nano model is available now through various platforms, while Super and Ultra versions are expected in the first half of 2026.

Read more about this research using this link.

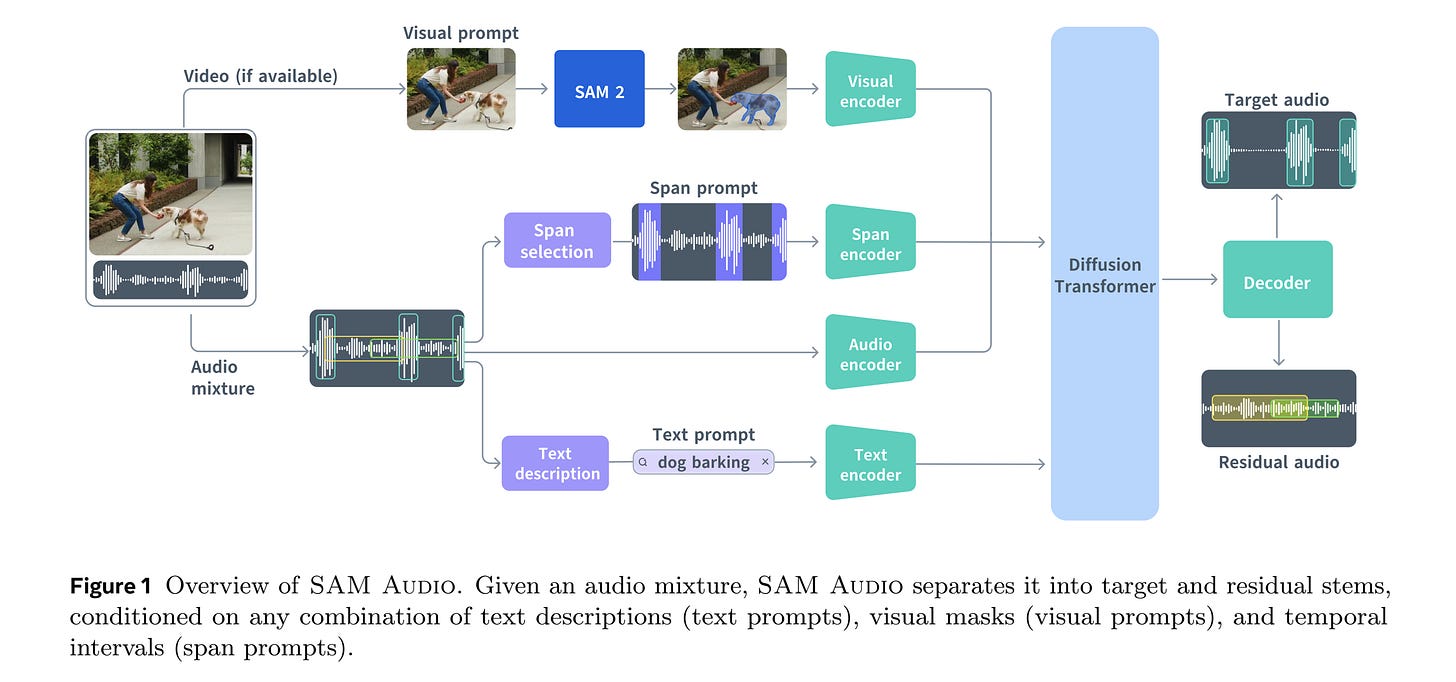

2. SAM Audio

In this research, Meta introduces SAM Audio, a foundational model that can separate any sound from an audio mixture using flexible prompts such as text descriptions, time segments, or visual masks from video.

The model is built on a diffusion transformer architecture and trained with flow matching on large-scale audio data spanning speech, music, and general sounds.

It achieves state-of-the-art performance across a wide range of benchmarks, including general sound, speech, music, and musical instrument separation, on both in-the-wild and professionally produced audio.

Read more about this research using this link.

3. The Copilot Usage Report 2025

This Microsoft report on Copilot usage is one of the largest AI customer usage studies published to date, analyzing 37.5 million de-identified conversations.

Its main findings are as follows:

- On mobile, "Health & Fitness" is the top topic at every hour of the day and in every month.
- On desktop, "Work & Career" overtakes "Technology" as the top topic from 8 am to 5 pm.
- Programming queries spike on weekdays, while gaming conversations rise on weekends.
- Philosophy and religion conversations rise late at night and continue through dawn.
- Relationship conversations surge on Valentine's Day, with "Personal Growth" peaking before it.
- Usage shifted from a programming-heavy, productivity focus in January toward society, culture, and social topics by September.
- Travel conversations rise during business hours across all devices.
- Entertainment follows a U-shaped curve: high at night, dropping during work hours.
- Users see AI as a professional coworker at their desk and a personal confidant on their phone.

Read more about this report using this link.

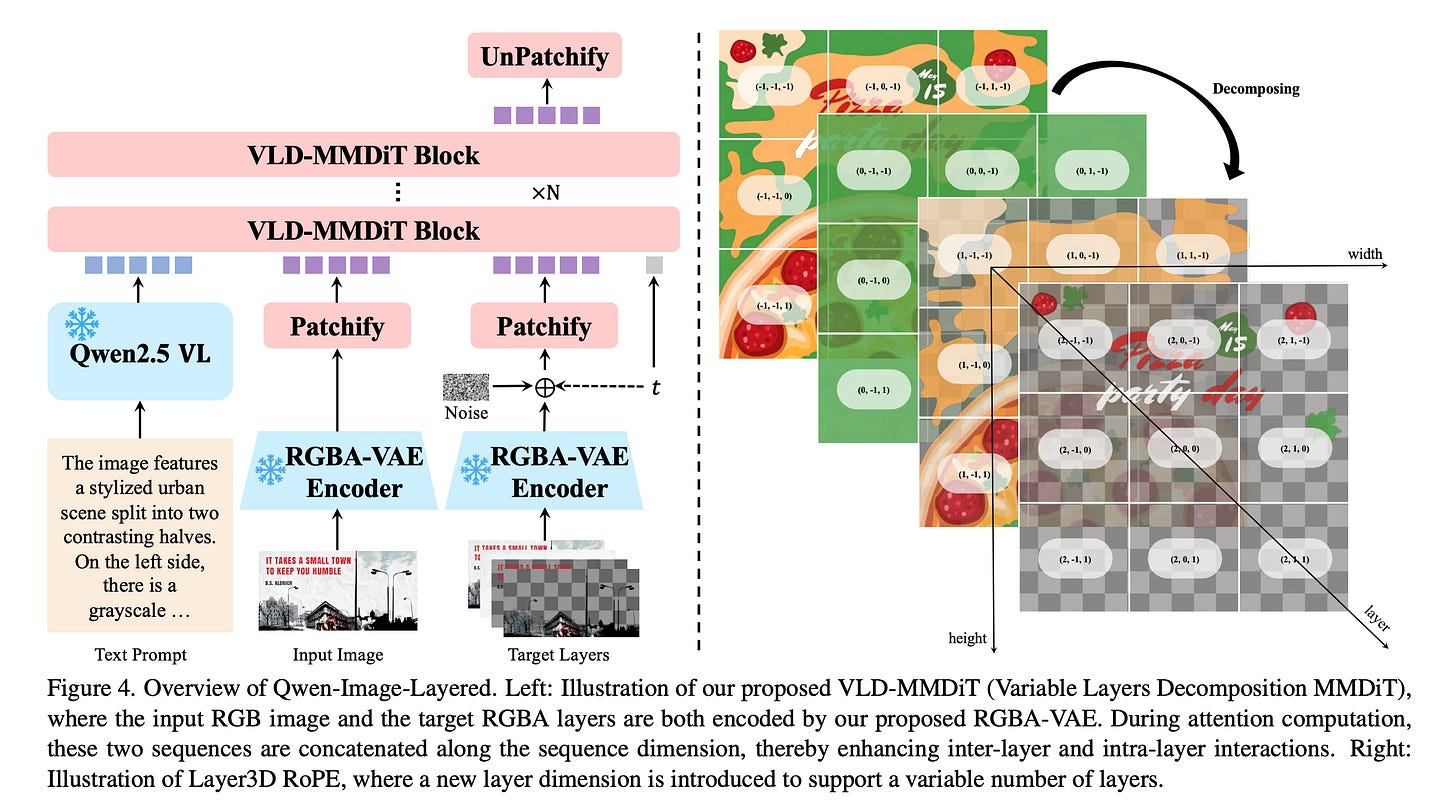

4. Qwen-Image-Layered

This research from Alibaba introduces Qwen-Image-Layered, a new end-to-end diffusion-based model that automatically decomposes an RGB image into separate RGBA layers, each representing a different object or region with its own transparency.

Each RGBA layer can be edited independently without affecting other layers.
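To see why layered RGBA output makes independent editing possible, here is a small sketch of standard alpha compositing (the "over" operator) applied to a single pixel. It does not reproduce anything from the model itself: the helper names `over` and `flatten`, the straight (non-premultiplied) alpha convention, and the example colors are assumptions chosen for illustration.

```python
def over(top, bottom):
    """Composite one RGBA pixel over another (straight alpha, channels in [0, 1])."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        # Both pixels fully transparent: nothing to blend.
        return (0.0, 0.0, 0.0, 0.0)

    def blend(t, b):
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)

def flatten(layers):
    """Composite per-pixel RGBA layers, listed bottom to top, into one pixel."""
    result = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result

background = (0.0, 0.0, 1.0, 1.0)  # opaque blue
subject = (1.0, 0.0, 0.0, 0.5)     # half-transparent red

pixel = flatten([background, subject])
# Recolor only the top layer; the background layer is left untouched.
recolored = flatten([background, (0.0, 1.0, 0.0, 0.5)])
```

Because the final image is just the layers composited in order, changing one layer (here, recoloring the subject) and re-flattening leaves every other layer's contribution as it was.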

The model is built around three key components:

- an RGBA-VAE that aligns standard RGB images with layered RGBA representations
- a VLD-MMDiT architecture designed to decompose a variable number of layers
- a multi-stage training strategy that adapts an existing image generation model into an effective layer-decomposition model

Read more about this research using this link.

5. NitroGen: An Open Foundation Model for Generalist Gaming Agents

This research from NVIDIA introduces NitroGen, a vision-action foundation model designed to train game-playing agents that can generalize across many different video games.

The model is trained on 40,000 hours of gameplay videos across more than 1,000 games.

It shows strong abilities across many game types, including combat in 3D action games, precise control in 2D platformers, and exploration in procedurally generated worlds.

It also generalizes well to new, unseen games, achieving up to a 52% improvement in task success rates compared to models trained from scratch.

Read more about this research using this link.

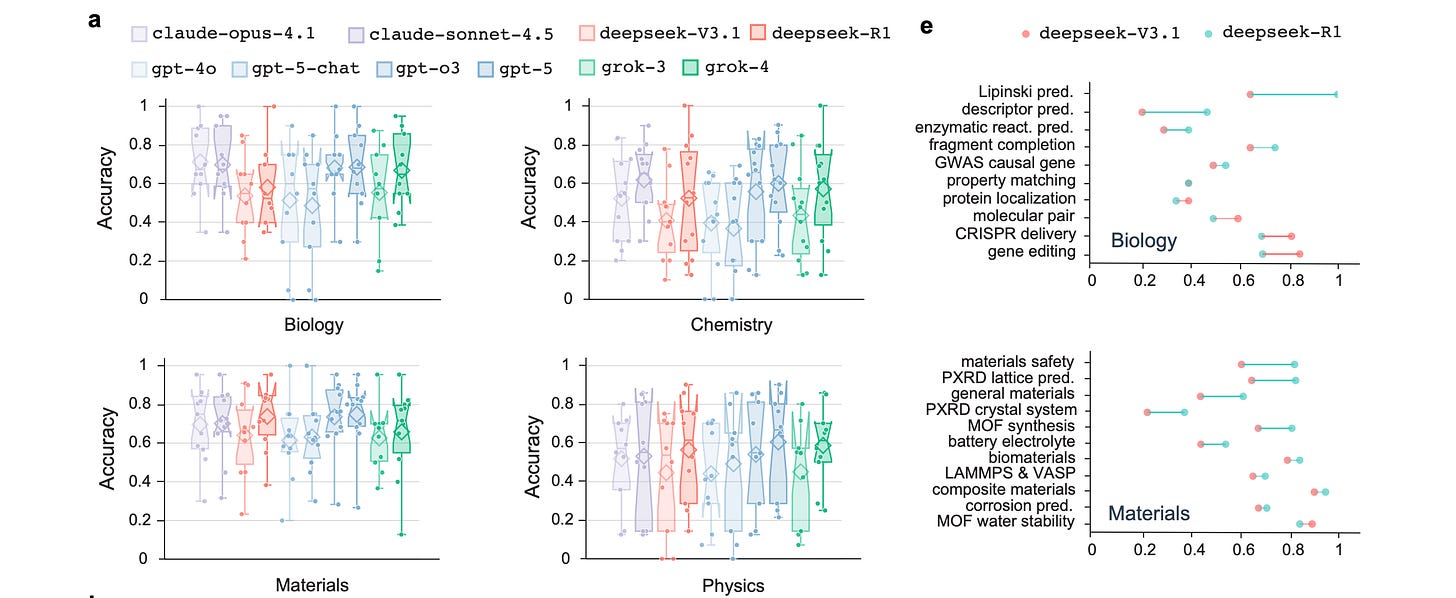

6. Evaluating Large Language Models in Scientific Discovery

The authors of this research paper introduce a new benchmark for evaluating how well LLMs support real scientific discovery across biology, chemistry, materials, and physics, beyond simply answering isolated factual questions.

Models are evaluated at two levels:

- Their accuracy on scenario-specific questions, and
- Their overall project-level performance on proposing testable hypotheses, designing experiments, and interpreting outcomes

Results show that current frontier LLMs perform worse on this benchmark than on standard science tests.

They gain limited benefits from scaling and share common weaknesses across providers, indicating they are still far from true scientific superintelligence.

Despite their limitations, LLMs already show promise across many scientific discovery tasks, suggesting that guided exploration and serendipity might still play important roles in scientific discovery.

Read more about this research using this link.

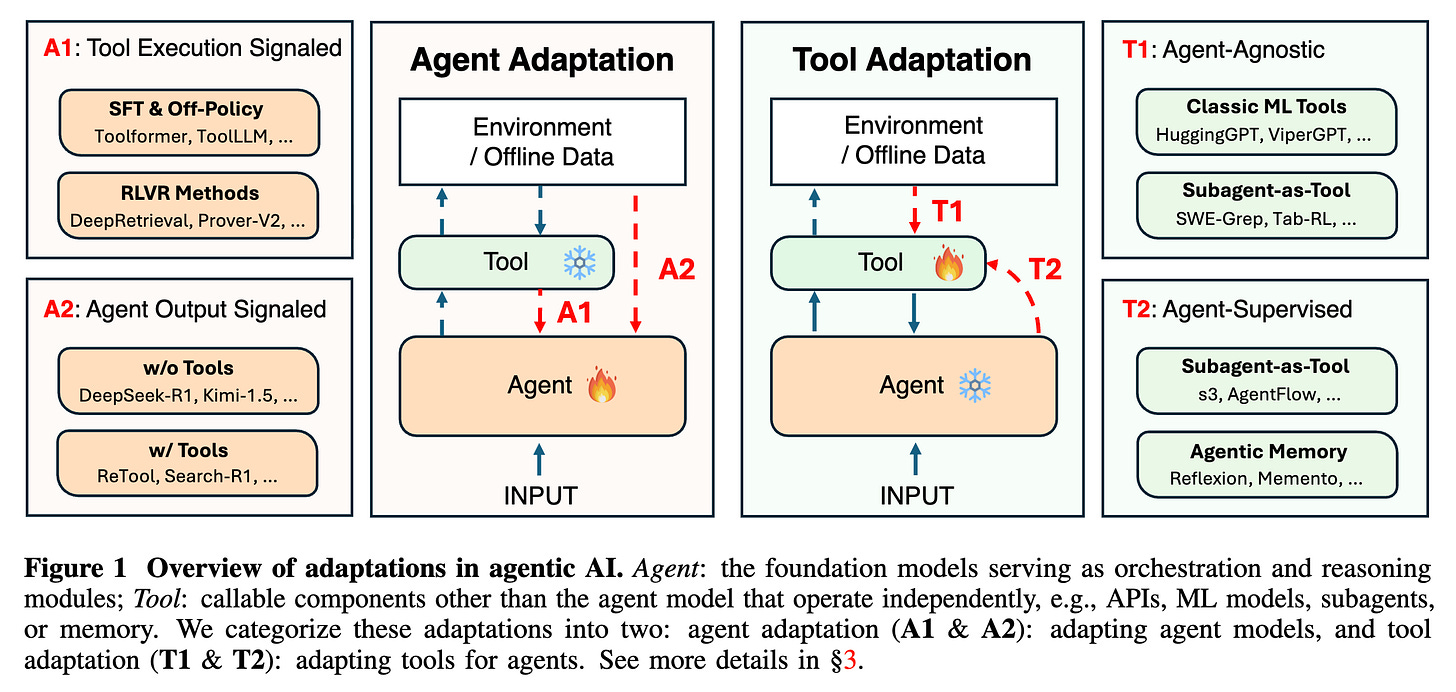

7. Adaptation of Agentic AI

This research paper introduces a framework for adapting agentic AI systems.

It organizes existing adaptation strategies into a systematic taxonomy covering both:

- Agent adaptations (how an agent’s internal behavior is adjusted)
- Tool adaptations (how external tools are tuned to work with agents)

The framework helps clarify the design space of current approaches, compare their strengths and limitations, and guide practical decisions when building or evolving agent-based AI systems.

Read more about this research using this link.

8. Memory in the Age of AI Agents: A Survey

This research paper surveys the rapidly growing field of memory systems for AI agents and proposes a framework for understanding and categorizing them.

It provides a clear overview of agent memory by distinguishing it from related ideas such as LLM memory, RAG, and context engineering, and by introducing a unified framework based on forms, functions, and dynamics.

It identifies three main memory forms (token-level, parametric, latent), three key functions (factual, experiential, working), and analyzes how memory is created, updated, and retrieved over time (dynamics).

The work also summarizes existing benchmarks and tools, and outlines future directions such as reinforcement learning integration, multimodal and multi-agent memory, and trustworthiness.

Read more about this research using this link.

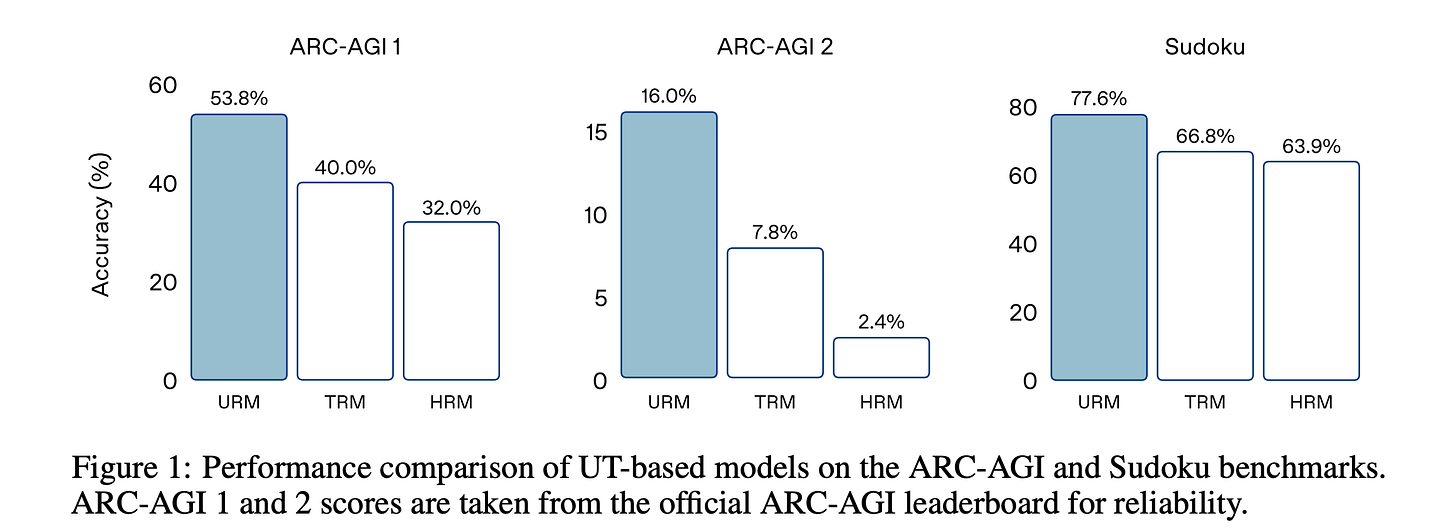

9. Universal Reasoning Model

This research paper analyzes variants of Universal Transformers (UTs) and shows that their performance gains on ARC-AGI mainly come from the recurrent inductive bias and strong nonlinear components of the Transformer, rather than from complex architectural designs.

Motivated by this finding, the authors propose the Universal Reasoning Model (URM), which enhances a UT with short convolution and truncated backpropagation.

This design significantly improves reasoning performance, achieving state-of-the-art results of 53.8% pass@1 on ARC-AGI 1 and 16.0% pass@1 on ARC-AGI 2.
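For readers unfamiliar with the metric, here is a minimal sketch of how a pass@k score is commonly computed. The paper's exact evaluation protocol is not described here, so treat this as an assumption: it uses the widely used unbiased estimator based on n sampled attempts per task, of which c are correct, and the function names are hypothetical. For k=1 it reduces to the fraction of attempts that are correct.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one task: n attempts sampled, c of them correct."""
    if n - c < k:
        # Fewer than k incorrect attempts: any k-subset contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k):
    """Average pass@k over tasks; results is a list of (n, c) pairs, one per task."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Toy example (made-up data): one attempt per task, two of four tasks solved.
score = benchmark_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 0)], k=1)
```

Under this reading, a pass@1 of 53.8% means a task is solved on the first attempt a little over half the time.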

Read more about this research paper using this link.

Read more about HRM and TRM using the following links.

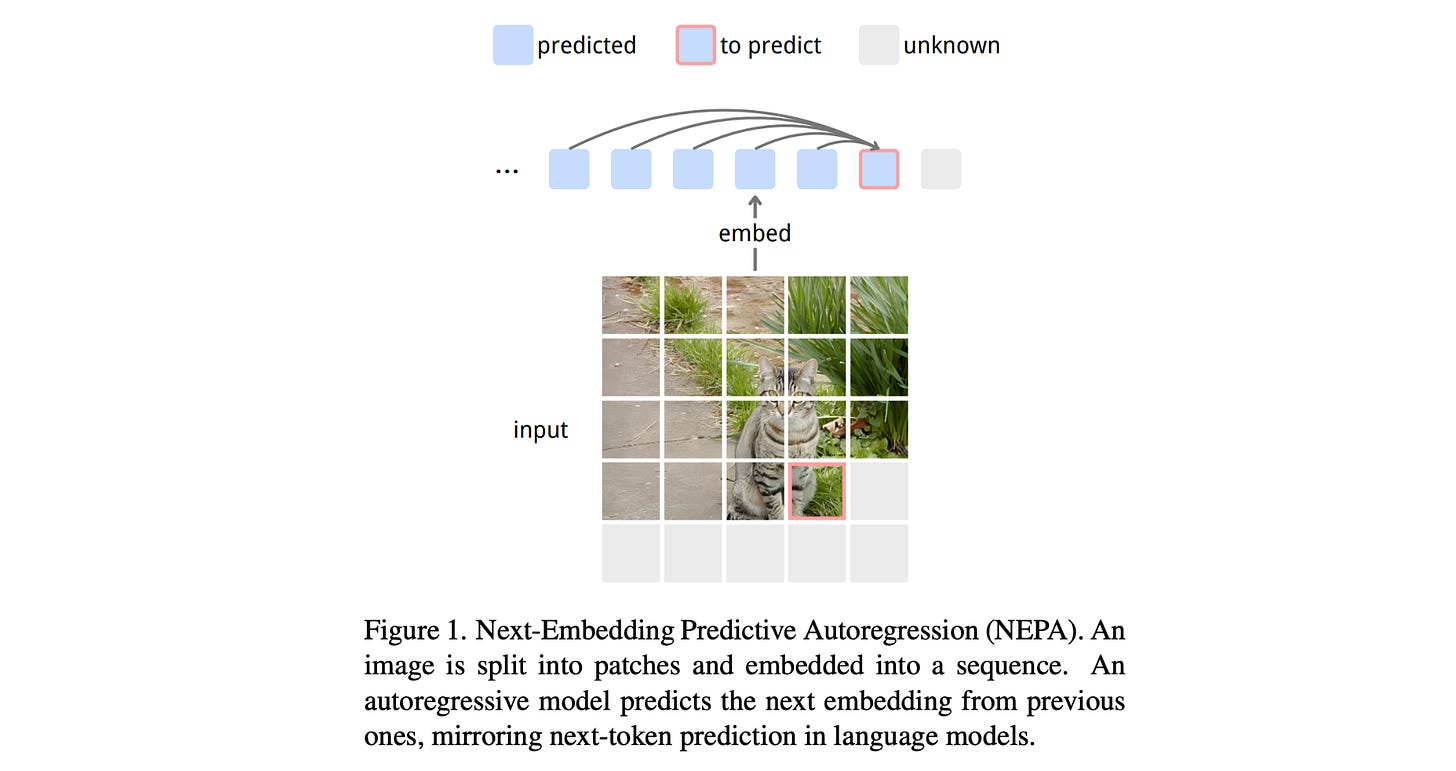

10. Next-Embedding Prediction Makes Strong Vision Learners

This research introduces a new self-supervised learning method for vision models called Next-Embedding Predictive Autoregression (NEPA).

This method lets a model learn by predicting future patch embeddings from past ones, rather than reconstructing pixels or using complex losses.
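The idea can be sketched as a simple objective: the prediction made at position t is compared with the actual embedding at position t + 1. The version below is only an illustration and not the authors' implementation. Using negative cosine similarity as the loss, and the function names, are assumptions; in a real setup the predictions would come from a Transformer over patch embeddings rather than hand-written lists.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def next_embedding_loss(predictions, embeddings):
    """Mean negative cosine similarity between the prediction at position t
    and the actual embedding at position t + 1.

    The last prediction has no target and is ignored.
    """
    pairs = list(zip(predictions[:-1], embeddings[1:]))
    return -sum(cosine_similarity(p, e) for p, e in pairs) / len(pairs)

# Toy sequence of three 2-D "patch embeddings" (made-up values).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# A predictor that always outputs the next embedding reaches the minimum, -1.0.
perfect = [[0.0, 1.0], [1.0, 1.0], [9.0, 9.0]]
best_loss = next_embedding_loss(perfect, embeddings)
```

Note that the target is an embedding, not pixels, which is what separates this style of objective from pixel-reconstruction methods.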

Using this approach with a simple Transformer, the method achieves strong performance on ImageNet-1K (approx. 84% top-1 accuracy) and transfers well to semantic segmentation tasks on ADE20K.

Read more about this research using this link.
