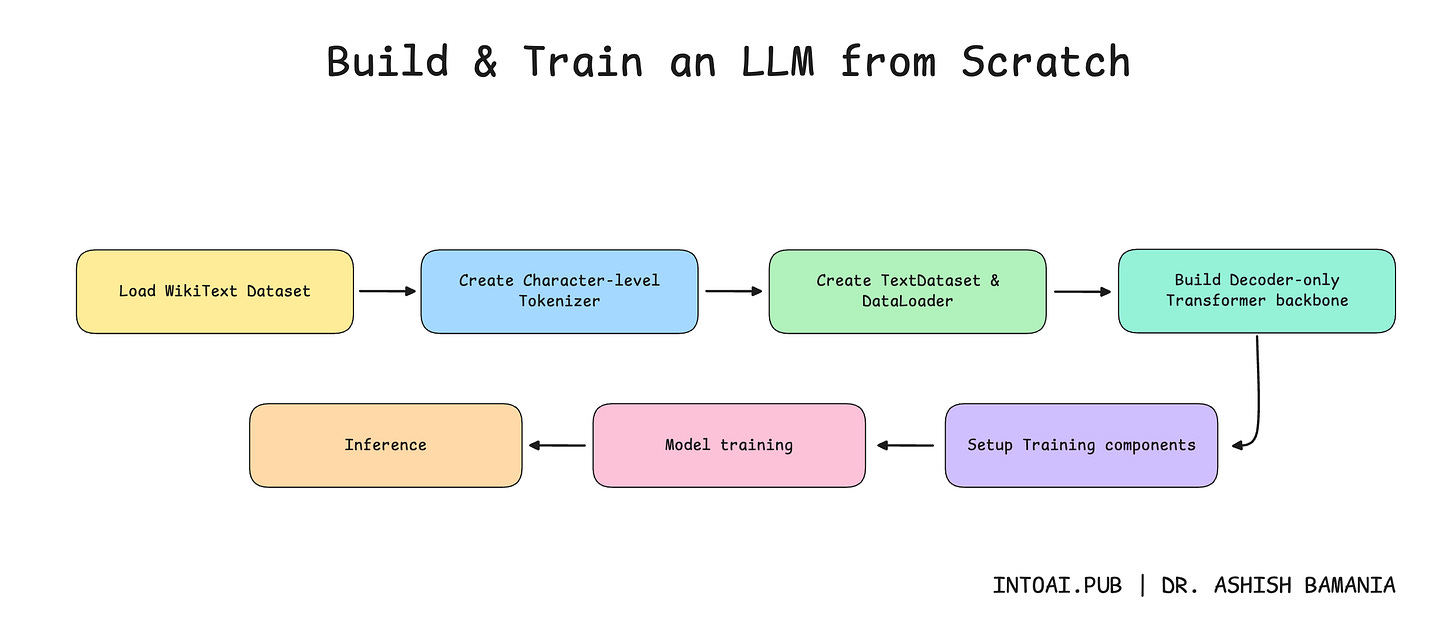

Build and Train an LLM from Scratch

An end-to-end guide to training an LLM from scratch to generate text.


In the previous lesson on ‘Into AI’, we learned how to implement the complete Decoder-only Transformer from scratch.

We then learned how to build a tokenizer for the model.

It’s now time to move forward and train our text-generation model.

Let’s begin!

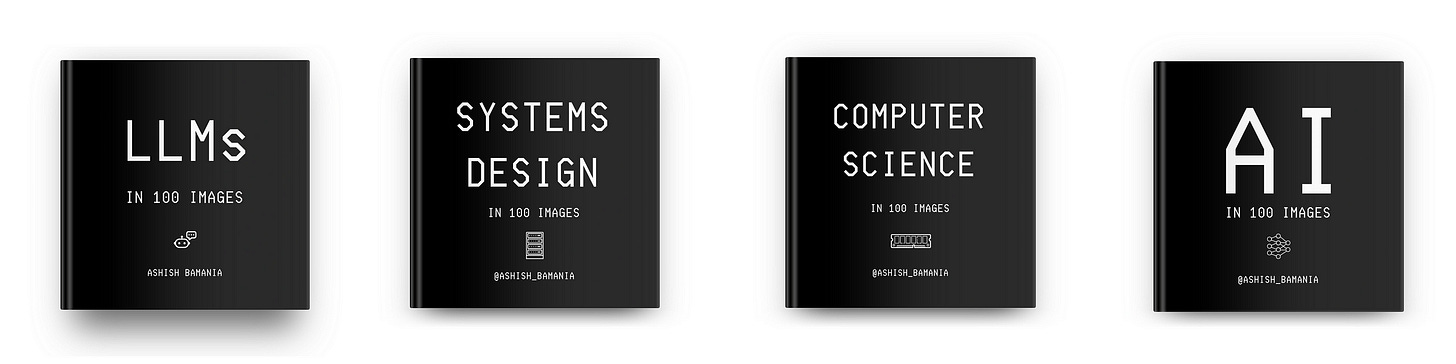


Getting our data ready

1. Download the dataset

We will use the WikiText-103 dataset from Hugging Face to train our model. This dataset is derived from verified Good and Featured Wikipedia articles and contains approximately 103 million words.

It is downloaded as follows.

```python
from datasets import load_dataset

# Load the WikiText-103 dataset ("train" split)
dataset = load_dataset("wikitext", "wikitext-103-v1", split="train")
```

Let’s check out a training example from this dataset.

```python
# Training example
print(dataset['text'][808])

"""
Output:

Organisations in the United Kingdom ( UK ) describe GA in less restrictive terms that include elements of commercial aviation . The British Business and General Aviation Association interprets it to be " all aeroplane and helicopter flying except that performed by the major airlines and the Armed Services " . The General Aviation Awareness Council applies the description " all Civil Aviation operations other than scheduled air services and non @-@ scheduled air transport operations for remuneration or hire " . For the purposes of a strategic review of GA in the UK , the Civil Aviation Authority ( CAA ) defined the scope of GA as " a civil aircraft operation other than a commercial air transport flight operating to a schedule " , and considered it necessary to depart from the ICAO definition and include aerial work and minor CAT operations .
"""
```

We concatenate all non-empty, stripped text lines from the training dataset, separating them with double newlines.

```python
training_text = "\n\n".join([text.strip() for text in dataset['text'] if text.strip()])
```

A short subset of training_text is shown below.

```python
# Examine a subset of 'training_text'
training_text[12003:12400]

"""
Output:

was initially recorded using orchestra , then Sakimoto removed elements such as the guitar and bass , then adjusted the theme using a synthesizer before redoing segments such as the guitar piece on their own before incorporating them into the theme . The rejected main theme was used as a hopeful tune that played during the game 's ending . The battle themes were designed around the concept of a
"""
```

The length of training_text is shown below.

```python
print(f"Training text length: {len(training_text):,} characters")

# Output: Training text length: 535,923,053 characters
```

2. Tokenize the dataset

It’s time to tokenize the dataset using the Tokenizer that we created in the last lesson.

```python
# Character-level tokenizer
class Tokenizer:
    def __init__(self, text):
        # Special token for characters not in the vocabulary
        self.UNK = "<UNK>"

        # All unique characters in the text (sorted), plus the UNK token
        self.chars = sorted(list(set(text)))
        self.chars += [self.UNK]

        # Vocabulary size
        self.vocab_size = len(self.chars)

        # Mapping from character to ID
        self.char_to_id = {char: idx for idx, char in enumerate(self.chars)}

        # Mapping from ID to character
        self.id_to_char = {idx: char for idx, char in enumerate(self.chars)}

    # Convert a text string to a list of token IDs
    def encode(self, text):
        return [self.char_to_id.get(ch, self.char_to_id[self.UNK]) for ch in text]

    # Convert a list of token IDs to a text string
    def decode(self, ids):
        return "".join(self.id_to_char.get(i, self.UNK) for i in ids)
```

We create an instance of the tokenizer from training_text and check its vocabulary size.

```python
# Create an instance of the tokenizer
tokenizer = Tokenizer(training_text)

# Vocabulary size
vocab_size = tokenizer.vocab_size
print(f"Vocabulary size: {vocab_size}")

# Output: Vocabulary size: 1251
```

Note that the vocabulary contains 1251 tokens (1250 distinct characters plus <UNK>). Given that the English alphabet has only 26 letters, you might wonder why the vocabulary is so large.
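One rough way to see where the extra characters come from is to bucket the vocabulary by Unicode general category. The sketch below is an illustration rather than part of the original lesson: the helper name `vocab_breakdown` is hypothetical, and it relies only on Python's standard `unicodedata` module. With the real tokenizer you would pass it `tokenizer.chars`; here a small stand-in list is used instead.

```python
import unicodedata
from collections import Counter

# Hypothetical helper: count vocabulary entries by the first letter of their
# Unicode general category (L = letter, N = number, P = punctuation,
# S = symbol, Z = separator, M = mark, C = control/other).
def vocab_breakdown(chars):
    counts = Counter()
    for ch in chars:
        if len(ch) != 1:
            # Skip multi-character special tokens such as "<UNK>"
            continue
        counts[unicodedata.category(ch)[0]] += 1
    return dict(counts)

# Small stand-in vocabulary (assumption); use tokenizer.chars in practice
sample = ["a", "Z", "7", ",", "é", "ж", "→", "∑", " ", "<UNK>"]
print(vocab_breakdown(sample))
# → {'L': 4, 'N': 1, 'P': 1, 'S': 2, 'Z': 1}
```

Uppercase and lowercase letters, digits, punctuation, accented and non-Latin letters, and mathematical symbols all count as separate characters in a character-level vocabulary, which is how the total climbs well past 26.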

Let’s peek through the vocabulary to see some of the characters it contains.

```python
print(" ".join(tokenizer.chars[800:850]))

"""
Output:

⅓ ⅔ ⅛ ⅜ ⅝ ⅞ ← ↑ → ↓ ↔ ↗ ↦ ↪ ⇄ ⇌ ∀ ∂ ∆ ∈ ∑ − ∕ ∖ ∗ ∘ √ ∝ ∞ ∩ ∪ ∴ ∼ ≈ ≠ ≡ ≢ ≤ ≥ ≪ ⊂ ⊕ ⊗ ⊙ ⊥ ⋅ ⋯ ⌊ ⌋ ①
"""
```

3. Create a dataset required for language modeling

With the tokenizer ready, we need a way to load and serve the data during training. This is where the TextDataset class comes in. It inherits from PyTorch's Dataset class and has the following methods:

- __init__: Tokenizes the given text and calculates how many fixed-length training sequences, each of length max_seq_length, can be created from it
- __len__: Returns the number of training sequences
- __getitem__: Returns the training sequence at a given index along with its targets (the same tokens shifted forward by one position)
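The three methods described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the lesson's own implementation: sequences are taken as non-overlapping windows, `encode` is any callable that maps a string to token IDs (for example `tokenizer.encode`), and items are returned as plain Python lists so the example runs without PyTorch installed.

```python
# Minimal sketch of the TextDataset logic (assumptions: non-overlapping
# windows, an `encode` callable instead of a tokenizer object, list outputs).
class TextDataset:
    def __init__(self, text, encode, max_seq_length):
        # Tokenize the whole text once up front
        self.token_ids = encode(text)
        self.max_seq_length = max_seq_length
        # Each sequence needs max_seq_length inputs plus one extra token for
        # the last target, hence the "- 1"
        self.num_sequences = max(0, (len(self.token_ids) - 1) // max_seq_length)

    def __len__(self):
        # Number of fixed-length training sequences
        return self.num_sequences

    def __getitem__(self, idx):
        if idx < 0 or idx >= self.num_sequences:
            raise IndexError(idx)
        start = idx * self.max_seq_length
        end = start + self.max_seq_length
        # Inputs cover tokens [start, end); targets are the same window
        # shifted forward by one position
        input_ids = self.token_ids[start:end]
        target_ids = self.token_ids[start + 1:end + 1]
        return input_ids, target_ids


# Toy usage with a stand-in character encoder (assumption)
ds = TextDataset("hello world", encode=lambda s: [ord(c) for c in s], max_seq_length=4)
print(len(ds))  # → 2
x, y = ds[0]
print(x, y)     # → [104, 101, 108, 108] [101, 108, 108, 111]
```

In a PyTorch training loop, the class would subclass `torch.utils.data.Dataset` and wrap both lists in `torch.tensor(...)` so a `DataLoader` can batch them. Non-overlapping windows are only one choice; stepping the window start by less than max_seq_length would produce more (overlapping) sequences from the same text.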